Things are looking really good for ClojureScript Lambdas over Clojure ones. From the results in my previous posts, the cold start times are way better, and even some basic code to list buckets from S3 ran significantly faster. In this post we will try some more intensive computation: loading a file from S3, parsing it as JSON, putting the items in a priority queue, performing calculations over them, and writing the results back to S3.

Setup

Business Logic

We'll put the business logic in a CLJC file, src/cljc/tax/calcs.cljc:

(ns tax.calcs)

(defrecord PriorityQueue [data])

(defn qcons [{:keys [data] :as q} v]
  (PriorityQueue. (update data v conj v)))

(defn qempty [{:keys [data] :as q}]
  (PriorityQueue. (empty data)))

(defn qseq [{:keys [data] :as q}]
  (when (seq data)
    (sequence
     (mapcat val)
     data)))

(defn qpeek [{:keys [data] :as q}]
  (some-> data first val first))

(defn qfirst [q] (qpeek q))

(defn qpop [{:keys [data] :as q}]
  (PriorityQueue.
   (let [v (qpeek q)
         ;; each map value is a plain list, so it needs core pop, not qpop
         updated-data (update data v pop)]
     (if (empty? (get updated-data v))
       (dissoc updated-data v)
       updated-data))))

(defn qnext [q] (qpop q))

(defn priority-queue
  ([comparator] (PriorityQueue. (sorted-map-by comparator)))
  ([comparator items] (reduce qcons (priority-queue comparator) items)))

(defn compare-items [a b]
  (compare (:a a) (:a b)))

(defn calculate-aux [queue]
  ;; realizing the items with mapv to print calc time
  (mapv
   (fn [{:keys [a b c d] :as item}]
     (let [x (+ a b c d)
           y (/ x c)
           z (* y a b c d)]
       {:x x :y y :z z}))
   (qseq queue)))

(defn calculate [items]
  (prn "QUEUING")
  (let [queue (time (priority-queue compare-items items))]
    (prn "CALCULATING")
    (time (calculate-aux queue))))

I'm using a priority queue here rather than just sorting as I use them heavily in my real workloads, so I wanted to compare the performance with them in the mix. The priority queue implementation here was picked apart from a Clojure one I had done that looked like this:

(ns tax.priority-queue
  (:import (clojure.lang IPersistentStack IPersistentCollection ISeq)))

(deftype PriorityQueue [data]
  IPersistentStack
  (peek [q] (some-> data first val first))
  (pop [q] (PriorityQueue. (let [v (peek q)
                                 updated-data (update data v pop)]
                             (if (empty? (get updated-data v))
                               (dissoc updated-data v)
                               updated-data))))

  IPersistentCollection
  (cons [q v] (PriorityQueue. (update data v conj v)))
  (empty [q] (PriorityQueue. (empty data)))

  ISeq
  (seq [q] (when (seq data)
             (sequence
              (mapcat val)
              data)))
  (first [q] (peek q))
  (next [q] (pop q))

  Object
  (toString [q] (str data)))

This was much nicer because it plugged into the core Clojure interfaces, but those were not available in ClojureScript, so the easiest thing to do seemed to be to just pick it apart.
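To make the behavior of the sorted-map-backed queue concrete, here is a minimal sketch of the same idea outside the record. The names `enqueue`, `drain`, and `by-a` are hypothetical and exist only for this illustration; the sample items and their `:id` field are made up as well.

```clojure
;; Sketch only: same bucketing idea as qcons/qseq, without the record wrapper.
(defn enqueue [data item]
  ;; The item doubles as the map key; the comparator decides which bucket it joins.
  (update data item conj item))

(defn drain [data]
  ;; Walk the buckets in comparator order and flatten them into one sequence.
  (mapcat val data))

(def by-a (fn [x y] (compare (:a x) (:a y))))

(def queued
  (reduce enqueue
          (sorted-map-by by-a)
          [{:a 3 :id "x"} {:a 1 :id "y"} {:a 3 :id "z"} {:a 2 :id "w"}]))

(mapv :id (drain queued))
;; => ["y" "w" "z" "x"]
```

Items come out ordered by `:a`. Items that compare as equal share one bucket, and because `conj` prepends to a list, the most recently added item in a bucket comes out first.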

I'll need to do some JSON parsing, so I'll add a dependency for that on the Clojure (JVM) side. JSON parsing is built into the JavaScript runtime that ClojureScript targets, so there is no need for an additional dependency there. Jsonista is supposedly among the fastest for JSON parsing, so I'll try that in deps.edn:

{:paths ["src/clj" "src/cljc"]
 :deps {software.amazon.awssdk/s3 {:mvn/version "2.17.100"}
        metosin/jsonista {:mvn/version "0.3.5"}}
 :aliases {:build {:deps {io.github.clojure/tools.build {:tag "v0.7.2" :sha "0361dde"}}
                   :ns-default build}
           :profile {:extra-paths ["dev/clj"]
                     :deps {software.amazon.awssdk/sqs {:mvn/version "2.17.100"}
                            software.amazon.awssdk/sso {:mvn/version "2.17.100"}}}}}

Then I need to update src/clj/tax/core.clj:

(ns tax.core
  (:require [jsonista.core :as json]
            [clojure.string :as s]
            [clojure.java.io :as io]
            [tax.calcs :refer [calculate]])
  (:import (software.amazon.awssdk.services.s3 S3Client)
           (software.amazon.awssdk.services.s3.model GetObjectRequest PutObjectRequest)
           (software.amazon.awssdk.core.sync RequestBody))
  (:gen-class
   :methods [^:static [calculationsHandler [Object] Object]]))

(def client (-> (S3Client/builder) (.build)))

(def output-bucket (System/getenv "CALCULATIONS_BUCKET"))

(defn put-object [bucket-name object-key body]
  (.putObject client
              (-> (PutObjectRequest/builder)
                  (.bucket bucket-name)
                  (.key object-key)
                  (.build))
              (RequestBody/fromString body)))

(defn get-object-as-string [bucket-name object-key]
  (-> (.getObjectAsBytes client (-> (GetObjectRequest/builder)
                                    (.bucket bucket-name)
                                    (.key object-key)
                                    (.build)))
      (.asInputStream)
      io/reader
      slurp))

(def mapper
  (json/object-mapper
   {:encode-key-fn name
    :decode-key-fn keyword}))

(defn ->items [input]
  ;; realizing the items with mapv to print parse time
  (mapv
   (fn [line]
     (json/read-value line mapper))
   (s/split input #"\n")))

(defn ->json-output [items]
  (s/join "\n" (map #(json/write-value-as-string % mapper) items)))

(defn -calculationsHandler [event]
  (let [[{message-body "body"}] (get event "Records")
        props (json/read-value message-body)
        bucket (get props "bucket")
        key (get props "key")
        _ (prn "GETTING OBJECT")
        input (time (get-object-as-string bucket key))
        _ (prn "PARSING INPUT")
        input-lines (time (->items input))
        calculated-items (calculate input-lines)
        _ (prn "CONVERTING TO OUTPUT")
        output-string (time (->json-output calculated-items))
        _ (prn "PUTTING TO OUTPUT")
        put-result (time (put-object output-bucket key output-string))]
    put-result))

and src/cljs/tax/core.cljs:

(ns tax.core
  (:require [cljs.core.async :as async :refer [<!]]
            [cljs.core.async.interop :refer-macros [<p!]]
            [clojure.string :as s]
            ["aws-sdk" :as aws]
            [tax.calcs :refer [calculate]])
  (:require-macros [cljs.core.async.macros :refer [go]]))

(def client (aws/S3.))

(def output-bucket js/process.env.CALCULATIONS_BUCKET)

(defn put-object [bucket-name object-key body]
  (.promise (.putObject client #js{"Bucket" bucket-name
                                   "Key" object-key
                                   "Body" body})))

(defn get-object [bucket-name object-key]
  (.promise (.getObject client #js{"Bucket" bucket-name,
                                   "Key" object-key})))

(defn get-object-as-string [bucket-name object-key]
  (go (let [resp (<p! (get-object bucket-name object-key))
            body (.-Body resp)]
        (.toString body "utf-8"))))

(defn ->items [input]
  ;; realizing the items with mapv to print parse time
  (mapv
   (fn [line]
     (js->clj (js/JSON.parse line) :keywordize-keys true))
   (s/split input #"\n")))

(defn ->json-output [items]
  (s/join "\n" (map (comp js/JSON.stringify clj->js) items)))

(defn handler [event context callback]
  ;; only grabbing a single message at a time, so we can just get the first.
  (go (let [message-body (get-in (js->clj event) ["Records" 0 "body"])
            props (js/JSON.parse message-body)
            bucket (.-bucket props)
            key (.-key props)
            _ (prn "GETTING OBJECT")
            input (time (<! (get-object-as-string bucket key)))
            _ (prn "PARSING INPUT")
            input-lines (time (->items input))
            calculated-items (calculate input-lines)
            _ (prn "CONVERTING TO OUTPUT")
            output-string (time (->json-output calculated-items))
            _ (prn "PUTTING TO OUTPUT")
            put-result (time (<p! (put-object output-bucket key output-string)))]
        (callback nil put-result))))

Note that I'm very naively reading the whole S3 object into a string, parsing it, and then building up another result string that I put back to S3. I would normally stream the object into my code line by line and stream the results out line by line, so that I could handle millions of lines without worrying about running out of memory. I didn't want to convert to the V3 JS SDK to do the streaming, though, as it would have required me to bundle dependencies, and I wanted to keep things simple for now. Plus, it seemed valuable to compare the performance of the naive solution anyway. I'll investigate the streaming performance differences in a later post.
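For a rough idea of what the line-by-line approach could look like on the JVM side, here is a minimal sketch. It is not the code used in this post: `process-lines!` is a hypothetical helper, and a `StringReader` and `StringWriter` stand in for the S3 input and output streams so the example runs on its own.

```clojure
(require '[clojure.java.io :as io]
         '[clojure.string :as s])
(import '(java.io StringReader StringWriter Writer))

(defn process-lines!
  "Reads source one line at a time, applies line-fn to each non-blank line,
  and writes each result to wtr followed by a newline."
  [source ^Writer wtr line-fn]
  (with-open [r (io/reader source)]
    (doseq [line (line-seq r)
            :when (not (s/blank? line))]
      ;; Only the current line is held here, not the whole input string.
      (.write wtr (str (line-fn line) "\n")))))

;; Stand-ins for the S3 object body and the upload stream (assumptions).
(def out (StringWriter.))

(process-lines! (StringReader. "1\n2\n3\n")
                out
                #(* 2 (Long/parseLong %)))

(str out)
;; => "2\n4\n6\n"
```

In the real handler, `line-fn` would parse a JSON line and run the calculation. The priority queue would still need every item before it can emit them in order, so only the parsing and serialization steps would benefit directly from this shape.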

After deploying, I create a JSON file and put it into my input bucket. The file looks like this but with 10K lines:

{"a":7208,"b":8222,"c":3079,"d":8034}
{"a":4373,"b":4571,"c":9613,"d":6360}
{"a":6475,"b":9061,"c":7890,"d":7405}
{"a":6313,"b":9926,"c":2113,"d":3585}
{"a":6735,"b":1542,"c":2977,"d":7342}
{"a":4769,"b":6147,"c":8894,"d":8591}
{"a":5291,"b":4264,"c":379,"d":201}
{"a":6250,"b":9358,"c":4807,"d":5538}
{"a":9794,"b":4387,"c":6253,"d":5677}
{"a":8836,"b":5336,"c":465,"d":7694}

Then in the Lambda console I test each Lambda with the following input (just changing the body from the provided SQS event example):

{
  "Records": [
    {
      "messageId": "19dd0b57-b21e-4ac1-bd88-01bbb068cb78",
      "receiptHandle": "MessageReceiptHandle",
      "body": "{\"bucket\": \"tax-engine-experiments-2-transactionsbucket-78gg1f219mel\", \"key\": \"test.json\"}",
      "attributes": {
        "ApproximateReceiveCount": "1",
        "SentTimestamp": "1523232000000",
        "SenderId": "123456789012",
        "ApproximateFirstReceiveTimestamp": "1523232000001"
      },
      "messageAttributes": {},
      "md5OfBody": "{{{md5_of_body}}}",
      "eventSource": "aws:sqs",
      "eventSourceARN": "arn:aws:sqs:us-east-1:123456789012:MyQueue",
      "awsRegion": "us-east-1"
    }
  ]
}

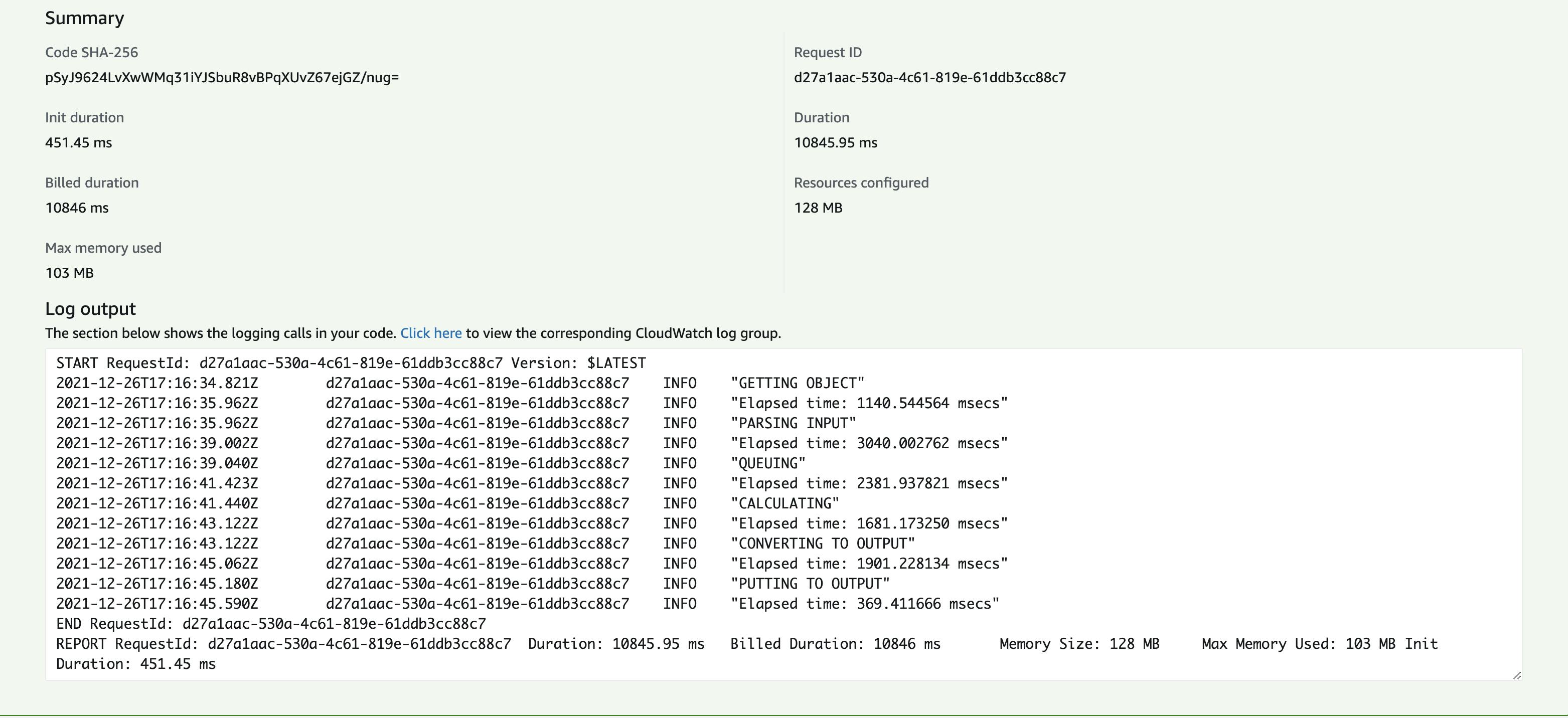

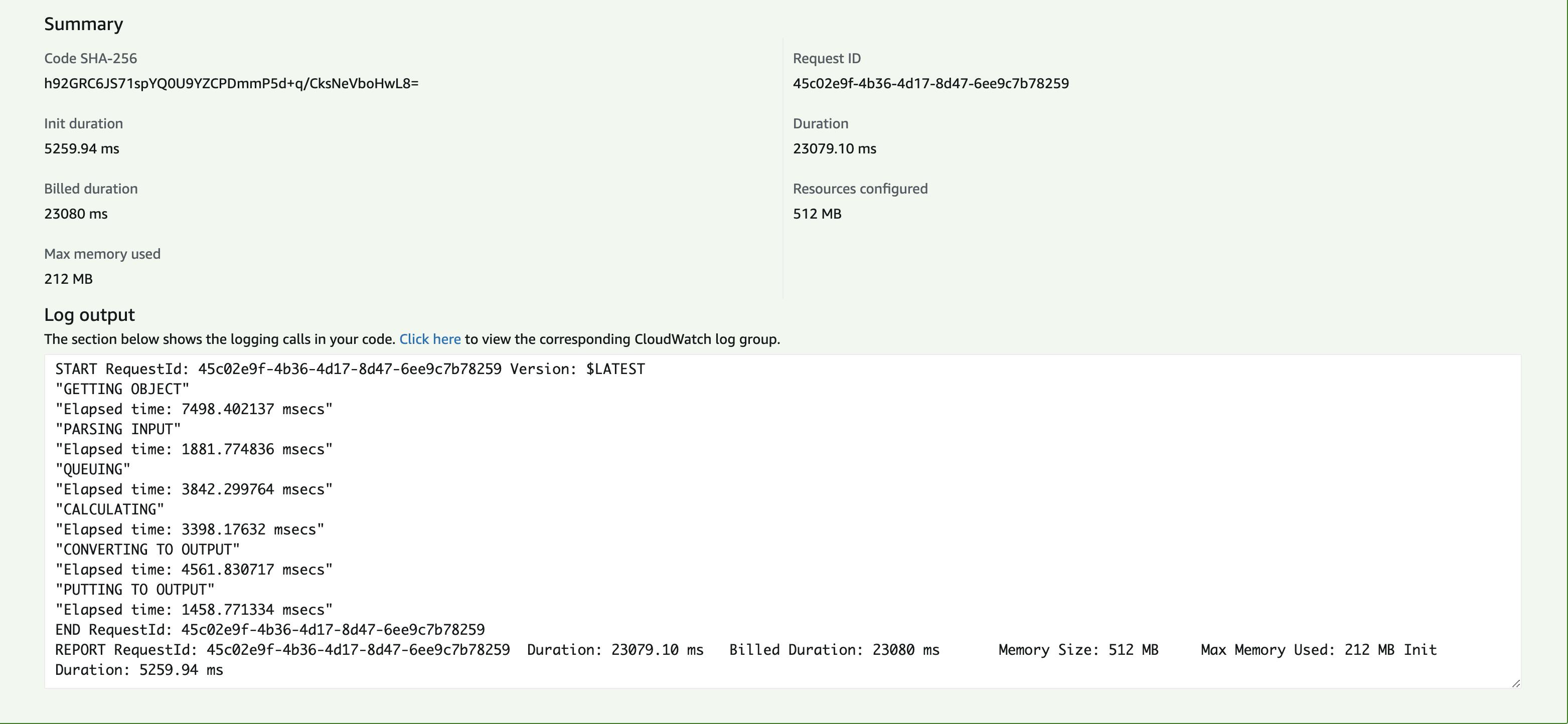

The results are, again, quite surprising:

ClojureScript:

And Clojure:

To summarize:

| Operation | Clojure (ms unless noted) | ClojureScript (ms unless noted) | Clojure / ClojureScript |
| --- | --- | --- | --- |
| GetObject as string | 7498.402137 | 1140.544564 | 6.57X |
| Parse to JSON | 1881.774836 | 3040.002762 | 0.62X |
| Enqueuing | 3842.299764 | 2381.937821 | 1.61X |
| Calculating | 3398.17632 | 1681.173250 | 2.02X |
| Serialize to JSON | 4561.830717 | 1901.228134 | 2.4X |
| PutObject results | 1458.771334 | 369.411666 | 3.95X |
| Init | 5259.94 | 451.45 | 11.65X |
| Memory Used | 212 MB | 103 MB | 2.06X |
| Total Duration | 23079.10 | 10845.95 | 2.13X |
| Total Duration - Init Duration | 17819.16 | 10394.5 | 1.71X |
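
The last column is simply the Clojure time divided by the ClojureScript time, so values above 1 mean ClojureScript was faster. As a quick check, a small sketch recomputes a few of the ratios from the figures in the table (the names `timings-ms`, `ratio`, and `ratios` are made up for this example):

```clojure
;; Timings in ms copied from the summary table: [Clojure ClojureScript].
(def timings-ms
  {"GetObject as string" [7498.402137 1140.544564]
   "Parse to JSON"       [1881.774836 3040.002762]
   "Init"                [5259.94 451.45]})

(defn ratio
  "Clojure time divided by ClojureScript time, rounded to two decimals."
  [[clj-ms cljs-ms]]
  (/ (Math/round (* 100.0 (/ clj-ms cljs-ms))) 100.0))

(def ratios
  (into {} (map (fn [[op t]] [op (ratio t)])) timings-ms))

ratios
;; => {"GetObject as string" 6.57, "Parse to JSON" 0.62, "Init" 11.65}
```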

Wow, ClojureScript outperformed Clojure in nearly every metric here! Now these metrics are just for a single run, so I'll break out the SQS blaster again and see what the durations look like:

(ns tax.profile
  (:import (software.amazon.awssdk.services.sqs SqsClient)
           (software.amazon.awssdk.services.sqs.model SendMessageRequest)))

(def queues (map
             (fn [suffix]
               (str "https://sqs.us-east-1.amazonaws.com/170594410696/tax-engine-experiments-2-run-calcs-queue-" suffix))
             ["clj" "cljs"]))

(defn profile []
  (doseq [queue queues]
    (let [sqs (-> (SqsClient/builder) (.build))
          req (-> (SendMessageRequest/builder)
                  (.queueUrl queue)
                  (.messageBody "{\"bucket\": \"tax-engine-experiments-2-transactionsbucket-78gg1f219mel\", \"key\": \"test.json\"}")
                  (.build))]
      (dotimes [i 1000]
        (.start (Thread. (fn [] (.sendMessage sqs req))))))))

| Lang | avg(@initDuration) (ms) | avg(@duration) (ms) | count(@initDuration) | count(@duration) |
| --- | --- | --- | --- | --- |
| Clojure | 5184.5053 | 1940.1077 | 32 | 1002 |
| ClojureScript | 465.752 | 8027.65 | 99 | 1001 |

Excluding cold start invocations, with the following query:

filter isempty(@initDuration) | stats avg(@duration)

We get:

| Lang | avg(@duration) (ms) |
| --- | --- |
| Clojure | 1198.9986 |
| ClojureScript | 7665.5545 |

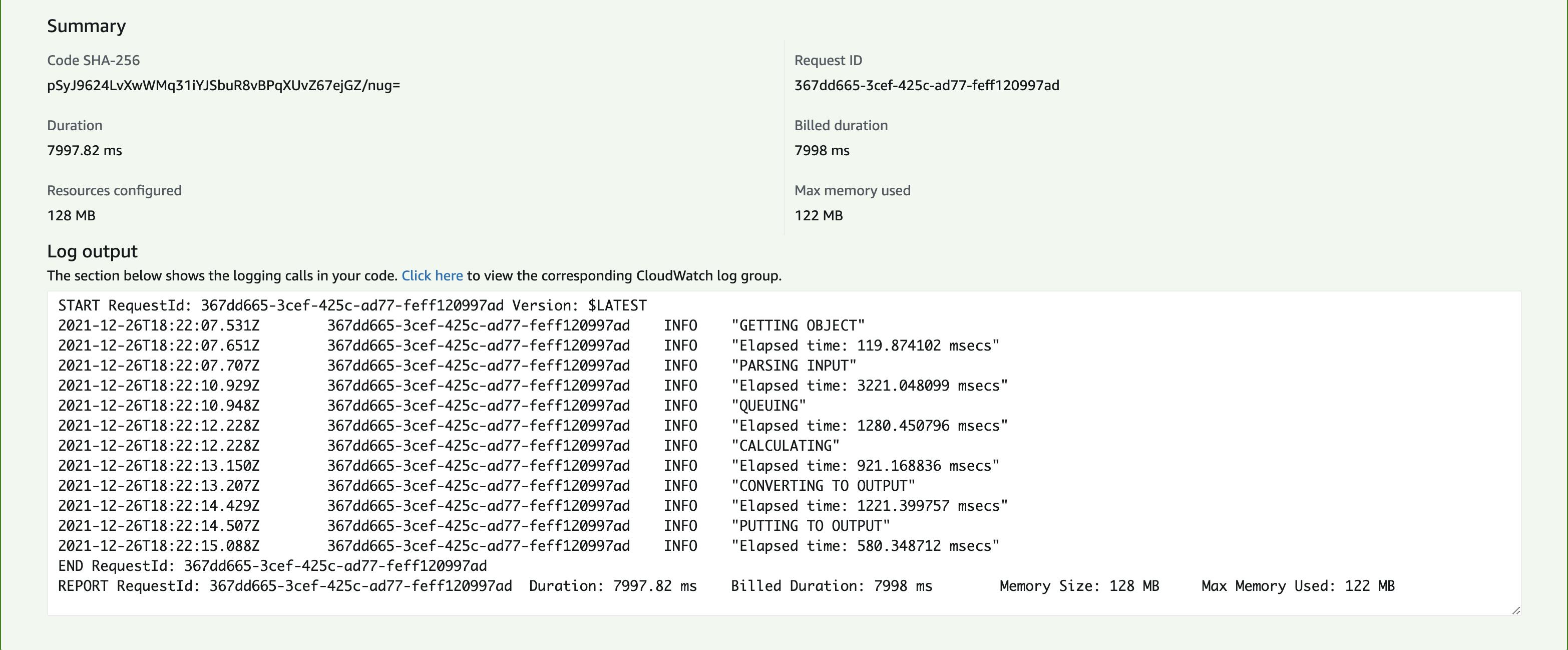

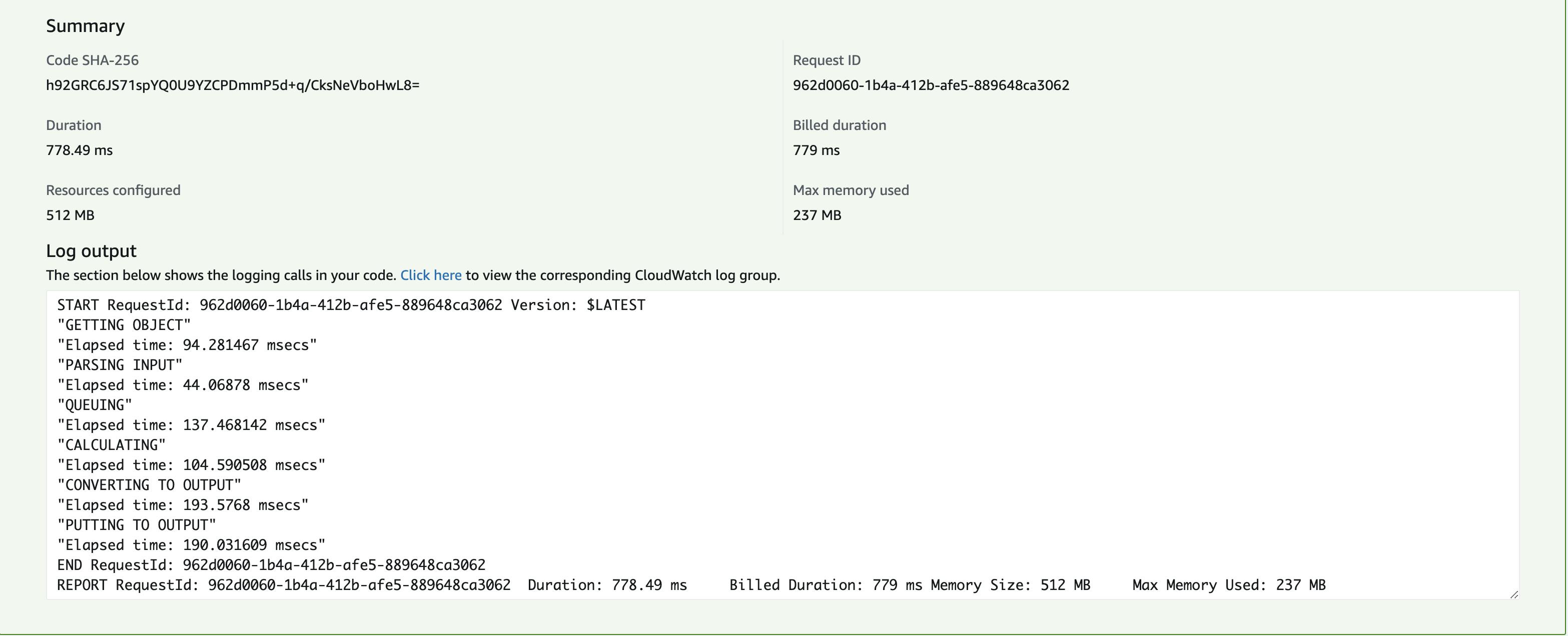

More surprising results! It seems that when the Clojure version is fully warmed, it is more than 6X faster! Let's run the warmed versions in the Lambda console to see the breakdowns again:

ClojureScript:

Clojure:

Summary

So it does seem that, once warmed, Clojure Lambdas can run significantly faster on more compute-intensive workloads than the equivalent ClojureScript Lambdas, at least with my naive S3 read and write code. The S3 interactions could be significantly tuned to the strengths of each language, I'm sure, but the mathematical calculations and data structure code are where my biggest concerns have been as far as ClojureScript goes. Those do seem to be nearly 10X as fast in Clojure as in ClojureScript. I wonder whether these differences are due to the languages, the runtimes, or both. Next time I'll add equivalent Java and JavaScript Lambdas to investigate.